Merge branch 'cicd' into 'master'

Cicd merge back

See merge request hpc-team/ansible_cluster_in_a_box!297

Former-commit-id: 2d3d261e


- `.gitlab-ci.yml`: 77 additions, 67 deletions

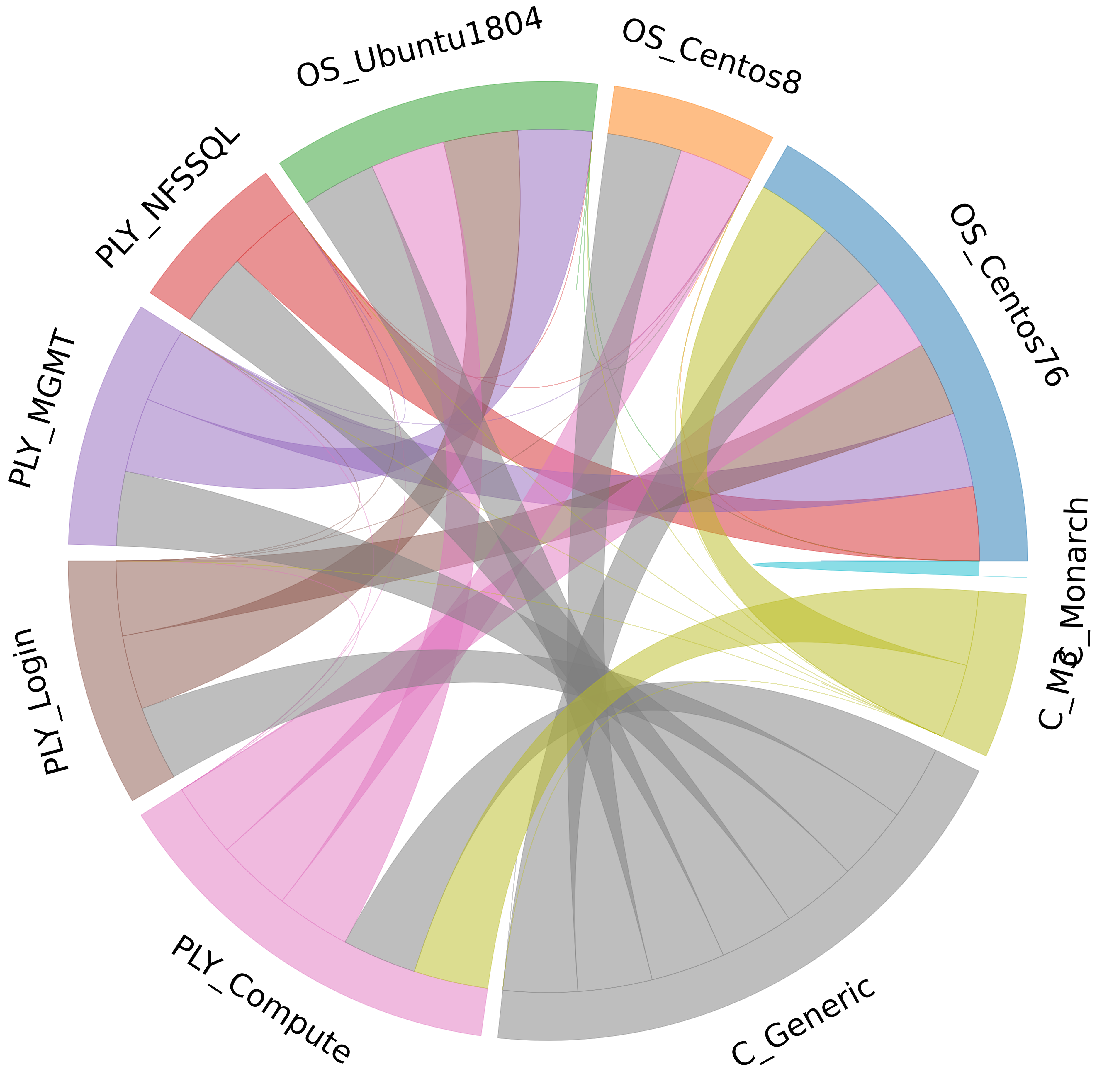

- `CICD/ChordDiagramm/Chord_Diagramm - Sheet1.csv`: 10 additions, 0 deletions

- `CICD/ChordDiagramm/Chord_Diagramm.png`: 0 additions, 0 deletions

- `CICD/ChordDiagramm/genChordDiagramm.py`: 241 additions, 0 deletions

- `CICD/ansible_create_cluster_script.sh`: 0 additions, 4 deletions

- `CICD/heat/gc_HOT.yaml`: 5 additions, 4 deletions

- `CICD/heat/heatcicdwrapper.sh`: 3 additions, 3 deletions

- `CICD/heat/server_rebuild.sh`: 65 additions, 0 deletions

- `CICD/master_playbook.yml`: 1 addition, 0 deletions

- `CICD/plays/allnodes.yml`: 1 addition, 0 deletions

- `CICD/plays/loginnodes.yml`: 1 addition, 0 deletions

- `CICD/plays/mgmtnodes.yml`: 1 addition, 0 deletions

- `CICD/tests/Readme.md`: 8 additions, 5 deletions

- `README.md`: 4 additions, 0 deletions

- `plays/allnodes.yml`: 0 additions, 47 deletions

- `plays/computenodes.yml`: 0 additions, 64 deletions

- `plays/files`: 0 additions, 1 deletion

- `plays/init_slurmconf.yml`: 0 additions, 15 deletions

- `plays/make_files.yml`: 0 additions, 22 deletions

- `plays/mgmtnodes.yml`: 0 additions, 44 deletions
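The per-file counts above can be rolled up into a single diffstat summary. The sketch below simply copies the listed (additions, deletions) pairs into a dictionary and sums them, assuming the list is the complete set of files changed by this merge; the names `DIFFSTAT` and `totals` are hypothetical and not part of any GitLab or git tooling.

```python
# Per-file (additions, deletions) pairs, copied from the change list of this merge.
# Assumption: this list is the complete set of files touched by the merge.
DIFFSTAT = {
    ".gitlab-ci.yml": (77, 67),
    "CICD/ChordDiagramm/Chord_Diagramm - Sheet1.csv": (10, 0),
    "CICD/ChordDiagramm/Chord_Diagramm.png": (0, 0),
    "CICD/ChordDiagramm/genChordDiagramm.py": (241, 0),
    "CICD/ansible_create_cluster_script.sh": (0, 4),
    "CICD/heat/gc_HOT.yaml": (5, 4),
    "CICD/heat/heatcicdwrapper.sh": (3, 3),
    "CICD/heat/server_rebuild.sh": (65, 0),
    "CICD/master_playbook.yml": (1, 0),
    "CICD/plays/allnodes.yml": (1, 0),
    "CICD/plays/loginnodes.yml": (1, 0),
    "CICD/plays/mgmtnodes.yml": (1, 0),
    "CICD/tests/Readme.md": (8, 5),
    "README.md": (4, 0),
    "plays/allnodes.yml": (0, 47),
    "plays/computenodes.yml": (0, 64),
    "plays/files": (0, 1),
    "plays/init_slurmconf.yml": (0, 15),
    "plays/make_files.yml": (0, 22),
    "plays/mgmtnodes.yml": (0, 44),
}


def totals(stats):
    """Return (files changed, total additions, total deletions)."""
    additions = sum(a for a, _ in stats.values())
    deletions = sum(d for _, d in stats.values())
    return len(stats), additions, deletions


if __name__ == "__main__":
    files, additions, deletions = totals(DIFFSTAT)
    print(f"{files} files changed, {additions} additions, {deletions} deletions")
```

Run as a script, it prints `20 files changed, 417 additions, 276 deletions`; most of the deletions come from removing the old top-level `plays/` files.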

File mode changes (old → new):

- `CICD/ChordDiagramm/Chord_Diagramm.png`: 0 → 100644 (new regular file, 1.87 MiB)
- `CICD/ChordDiagramm/genChordDiagramm.py`: 0 → 100644 (new regular file)
- `CICD/heat/server_rebuild.sh`: 0 → 100755 (new executable file)
- `CICD/plays/loginnodes.yml`: 0 → 120000 (new symbolic link)
- `plays/allnodes.yml`: 100644 → 0 (deleted)
- `plays/computenodes.yml`: 100644 → 0 (deleted)
- `plays/files`: 120000 → 0 (deleted symbolic link)
- `plays/init_slurmconf.yml`: 100644 → 0 (deleted)
- `plays/make_files.yml`: 100644 → 0 (deleted)
- `plays/mgmtnodes.yml`: 100644 → 0 (deleted)
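The `old → new` values are git's octal tree-entry modes, where 0 on one side means the file did not exist before (added) or no longer exists (deleted). As an illustrative sketch, the modes that appear in this commit can be decoded with Python's standard `stat` module; the helper names `describe_git_mode` and `describe_change` are hypothetical and not part of git or GitLab.

```python
import stat


def describe_git_mode(mode: int) -> str:
    """Describe a git tree-entry mode such as 0o100644, 0o100755 or 0o120000."""
    if mode == 0:
        return "absent"  # 0 marks the side of the change where the file does not exist
    if stat.S_ISLNK(mode):
        return "symbolic link"  # 120000
    if stat.S_ISREG(mode):
        # git records only whether the owner-execute bit is set: 100755 vs 100644
        return "executable file" if mode & stat.S_IXUSR else "regular file"
    return "other"


def describe_change(old: str, new: str) -> str:
    """Turn an 'old -> new' pair of octal mode strings into a short description."""
    old_mode, new_mode = int(old, 8), int(new, 8)
    if old_mode == 0:
        return f"added as {describe_git_mode(new_mode)}"
    if new_mode == 0:
        return f"deleted ({describe_git_mode(old_mode)})"
    return f"changed from {describe_git_mode(old_mode)} to {describe_git_mode(new_mode)}"


if __name__ == "__main__":
    # A few of the mode changes listed for this merge.
    for path, old, new in [
        ("CICD/heat/server_rebuild.sh", "0", "100755"),
        ("CICD/plays/loginnodes.yml", "0", "120000"),
        ("plays/files", "120000", "0"),
        ("plays/allnodes.yml", "100644", "0"),
    ]:
        print(f"{path}: {describe_change(old, new)}")
```

For example, `describe_change("0", "100755")` yields `added as executable file`, matching the new `server_rebuild.sh` script.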